What Is a Load Balancer and Why Is It Used?

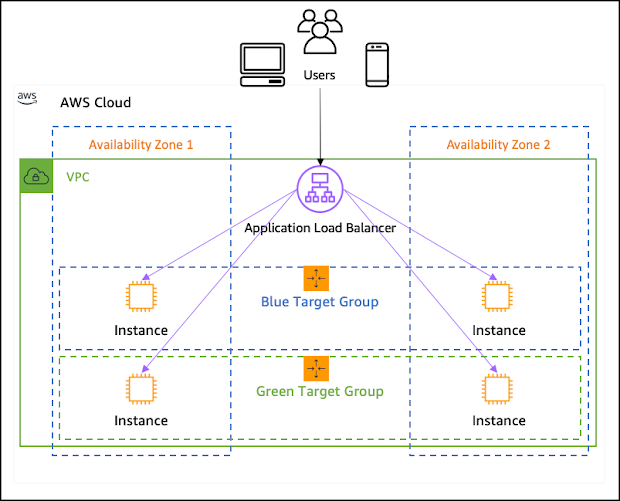

Load balancing is the practice of spreading computational workloads across two or more computers. On the Internet, it is used to distribute network traffic among multiple servers. This reduces the load on each server, lets each one work more efficiently, and improves performance while lowering latency. Most large-scale Internet applications rely on load balancing to work reliably.

As a business meets the demand for its applications, the load balancer determines which servers are able to handle incoming traffic, which helps ensure a positive user experience. Load balancers manage the flow of data between servers and client devices (PCs, laptops, tablets, or smartphones). The servers may be located on-premises, in a data center, or in the public cloud. The load balancer helps servers move data efficiently, makes the most of application delivery resources, and prevents any single server from being overloaded.

Load balancers check the health of servers regularly to make sure they can handle requests. If necessary, the load balancer removes unhealthy servers from the pool until they recover. To cope with rising demand, some load balancers can even trigger the creation of new virtualized application servers.
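The paragraph above describes taking unhealthy servers out of the pool and returning them once they recover. The sketch below shows one common way to do that, assuming a probe function that returns True or False for each server and two illustrative thresholds: three consecutive failed probes remove a server, and two consecutive successful probes re-admit it. The class name `HealthTracker`, the server names, and both thresholds are hypothetical and not taken from any particular product.

```python
from typing import Callable, Dict, List


class HealthTracker:
    """Keeps a server pool up to date from periodic health-probe results.

    The thresholds are illustrative defaults, not values from any product.
    """

    def __init__(self, servers: List[str], fail_threshold: int = 3, rise_threshold: int = 2) -> None:
        self.servers = list(servers)
        self.fail_threshold = fail_threshold  # consecutive failures before removal
        self.rise_threshold = rise_threshold  # consecutive successes before re-admission
        self.healthy: Dict[str, bool] = {s: True for s in self.servers}
        self._streak: Dict[str, int] = {s: 0 for s in self.servers}

    def record(self, server: str, ok: bool) -> None:
        """Count consecutive probe results that disagree with the current state."""
        if ok == self.healthy[server]:
            self._streak[server] = 0  # result agrees with current state: reset
            return
        self._streak[server] += 1
        limit = self.rise_threshold if ok else self.fail_threshold
        if self._streak[server] >= limit:
            self.healthy[server] = ok  # flip state: remove from or return to the pool
            self._streak[server] = 0

    def run_checks(self, probe: Callable[[str], bool]) -> None:
        """Probe every server once; `probe` stands in for a real HTTP or TCP check."""
        for s in self.servers:
            self.record(s, probe(s))

    def pool(self) -> List[str]:
        """Servers currently eligible to receive traffic."""
        return [s for s in self.servers if self.healthy[s]]


if __name__ == "__main__":
    tracker = HealthTracker(["app1", "app2", "app3"])
    down = {"app2"}  # simulate a failing server
    for _ in range(3):
        tracker.run_checks(lambda s: s not in down)
    print(tracker.pool())  # ['app1', 'app3']
    down.clear()  # the server recovers
    for _ in range(2):
        tracker.run_checks(lambda s: s not in down)
    print(tracker.pool())  # ['app1', 'app2', 'app3']
```

Requiring several consecutive results before changing state keeps a single slow or dropped probe from bouncing a server in and out of the pool.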

Load balancers are often implemented as hardware appliances, but they are increasingly software-defined. Because they sit in the path of every request to a company's applications, load balancers are an important component of its digital strategy.

How does it work?

Load balancers monitor the health of backend resources and divert traffic away from servers that cannot handle requests. A load balancer distributes traffic across the web servers in the resource pool. Whether it is implemented in hardware or software, and whatever algorithm it uses, its job is to keep any single server from becoming overworked and therefore unreliable. In doing so, it reduces server response times and increases throughput.

A load balancer is sometimes compared to a traffic cop: it routes requests to the appropriate destinations at any given moment, avoiding costly bottlenecks and unplanned incidents. Ultimately, load balancers should provide the performance and security needed to support complex IT environments and the operations that run within them.

Load balancing is one of the most scalable ways to handle the large volume of requests generated by today's multi-app, multi-device workflows. Combined with systems that provide seamless access to the applications and desktops in today's digital workspaces, load balancing helps give employees a more consistent and reliable end-user experience.

Its uses

Load balancing can do more than spread traffic. Software load balancers, for example, can use predictive analytics to anticipate traffic bottlenecks before they occur. They also give businesses valuable data about their traffic, which is vital for automation and can inform commercial decision-making.

In the seven-layer Open Systems Interconnection (OSI) model, network firewalls operate at layers one through three (L1 Physical, L2 Data Link, and L3 Network). Load balancing takes place at layers four through seven (L4 Transport, L5 Session, L6 Presentation, and L7 Application).

The following are some of the functions of load balancers:

• L4 — directs traffic based on network and transport layer protocol data, such as IP address and TCP port.

• L7 — adds content switching to load balancing, so routing decisions can be based on HTTP headers, uniform resource identifiers (URIs), SSL session IDs, and HTML form data.

• Global Server Load Balancing (GSLB) extends L4 and L7 capabilities to servers throughout the globe.
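The difference between L4 and L7 decisions can be sketched as two routing functions. In this minimal sketch, assuming a fixed backend list, the L4 function sees only addresses and ports and hashes them so every packet of one TCP connection lands on the same server, while the L7 function inspects the HTTP request path and picks a pool by longest matching URI prefix. The function names `l4_pick` and `l7_pick`, the route table, and the addresses are hypothetical; real load balancers offer many more L7 match conditions (headers, cookies, SSL session IDs).

```python
import hashlib
from typing import Dict, List


def l4_pick(src_ip: str, src_port: int, dst_port: int, servers: List[str]) -> str:
    """L4-style choice: uses only IP addresses and TCP ports, never the payload.

    SHA-256 is used (rather than Python's built-in hash) so the mapping is
    stable across processes.
    """
    key = f"{src_ip}:{src_port}->{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


def l7_pick(path: str, routes: Dict[str, List[str]], default: List[str]) -> List[str]:
    """L7-style content switching on the request's URI path.

    Returns a candidate pool; a balancing algorithm would then choose one
    server from it. The longest matching prefix wins, so "/api/v2" is tried
    before "/api".
    """
    for prefix in sorted(routes, key=len, reverse=True):
        if path.startswith(prefix):
            return routes[prefix]
    return default


if __name__ == "__main__":
    backends = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]
    print(l4_pick("203.0.113.7", 51514, 443, backends))  # same tuple, same server every time

    routes = {"/api": ["api-1", "api-2"], "/static": ["cdn-1"]}
    print(l7_pick("/api/users", routes, ["web-1"]))   # ['api-1', 'api-2']
    print(l7_pick("/index.html", routes, ["web-1"]))  # ['web-1']
```

The prefix match here is deliberately naive (for example, "/apiary" would also match "/api"); it only illustrates that L7 routing depends on request content, which L4 routing never examines.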

Common Load Balancing Algorithms

To determine how requests are spread across the server farm, a load balancer (or the application delivery controller, or ADC, that incorporates it) uses an algorithm. Many options are available, ranging from simple to complex.

Some of them are as follows:

• Round robin is a simple method in which each client request is routed to the next virtual server in a rotating list. It is easy for load balancers to implement, but it ignores how much load a server already has, so a server may become overloaded if it receives a large number of processor-intensive requests.

• The least response time method relies on how quickly each server responds to a health-monitoring request. The response time indicates how busy the server is and how quickly users can expect to be served, so requests go to the server that responds fastest.

• The least connection method takes each server's current demand into account, so it usually delivers better performance. With this method, requests are sent to the virtual server with the fewest active connections.

• With the custom load method, the load balancer queries the load on individual servers via SNMP. The administrator specifies which load metrics to query (such as CPU usage, memory, and response time) and combines them to meet their needs.
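The four selection methods listed above can each be reduced to a few lines. This is a minimal sketch under simplifying assumptions: the load balancer already knows each server's active connection count and latest health-probe time, and the custom load metrics have been collected (the article mentions SNMP; polling is not shown) and normalized to the range 0 to 1. All names, metric keys, and weights are hypothetical.

```python
import itertools
from typing import Dict, List


class RoundRobin:
    """Hands out servers in a fixed rotating order, ignoring their current load."""

    def __init__(self, servers: List[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


def least_connections(active: Dict[str, int]) -> str:
    """Server with the fewest active connections (ties go to the first listed)."""
    return min(active, key=active.get)


def least_response_time(probe_ms: Dict[str, float]) -> str:
    """Server whose latest health-probe reply was fastest, in milliseconds."""
    return min(probe_ms, key=probe_ms.get)


def custom_load(metrics: Dict[str, Dict[str, float]], weights: Dict[str, float]) -> str:
    """Weighted sum of administrator-chosen metrics; the lowest score wins.

    Assumes every metric is already normalized to 0..1 (for example CPU use,
    memory use, and a scaled response time).
    """
    def score(server: str) -> float:
        return sum(weight * metrics[server][name] for name, weight in weights.items())

    return min(metrics, key=score)


if __name__ == "__main__":
    rr = RoundRobin(["a", "b", "c"])
    print([rr.pick() for _ in range(4)])                           # ['a', 'b', 'c', 'a']
    print(least_connections({"a": 12, "b": 3, "c": 7}))            # b
    print(least_response_time({"a": 40.0, "b": 95.0, "c": 18.5}))  # c
    metrics = {
        "a": {"cpu": 0.90, "mem": 0.30, "resp": 0.20},
        "b": {"cpu": 0.40, "mem": 0.60, "resp": 0.50},
    }
    weights = {"cpu": 0.5, "mem": 0.2, "resp": 0.3}
    print(custom_load(metrics, weights))                           # b (0.47 vs 0.57)
```

Round robin needs no information about the servers, which is why it is cheap but load-blind; the other three trade a little bookkeeping or monitoring for decisions that reflect each server's actual state.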

Conclusion

IT departments can use an ADC with load balancing features to ensure the scalability and availability of services. Its extensive traffic management capabilities can help a firm route each end user's requests efficiently to the appropriate resources. An ADC can also provide a single point of management for protecting, controlling, and monitoring the many applications and services across environments, while helping deliver the best possible end-user experience.
